Governments, big tech and civil society organizations around the world are taking first steps to tackle disinformation and computational propaganda. We identify the weaknesses of the current measures and recommend ways forward from a digital technology point of view.

A digital solutions perspective

Last week’s terror attack in Hanau, Germany, revives the debate about the role of online disinformation and computational propaganda in stoking extremist violence. Incendiary remarks by far-right AfD party members (about shisha bars in this instance) are widely held responsible for inciting this and previous acts of terrorism in the country.

Similar crises around the world, including the far-right terror attacks in Christchurch, New Zealand and El Paso, USA in 2019, are the result of online hate spread on social media and forums. In 2017 and 2018, a series of targeted false rumours spread on WhatsApp led to 46 lynchings across India. The Rohingya genocide and ethnic violence in Myanmar are now considered to be the result of fake news and propaganda.

Moreover, new subcultures are emerging on the internet, for example the so-called “incels” or “involuntary celibates” – men who are unable to find romantic or sexual partners, and therefore justify violence against women. The “incels” network is linked to at least four mass murders that took 45 lives in North America.

Worldwide, academic institutions, governments, think tanks, and even big tech platforms like Google, Facebook and Twitter have joined the discussion and started taking action over the last two years. A significant number of recommendations have been produced, most comprehensively by the NYU Stern Center for Business and Human Rights, the European Union and its member states, as well as a sub-committee of the UK Parliament.

However, as a digital solutions provider (i.e. a middle party between big tech platforms and their customers), our assessment is that many of the measures and recommendations so far are reactive rather than proactive. They also rely too heavily on the resources, reports and goodwill of the companies running the digital platforms.

We first discuss six systemic weaknesses, followed by our own recommendations.

Monitoring systems are easily deceived and bypassed

Technology changes constantly and in unexpected ways. As in computer security, where every new defence is met by a new virus, we should expect a new loophole for every new solution or regulation against disinformation. Eliminating the loopholes won’t be easy, but a more forward-looking approach will hasten the process.

For example, bot networks and inauthentic accounts are being identified by AI or simpler algorithms that detect patterns in their activity. Internal tracking software could show that thousands of Twitter accounts tweet 50 times a day at a similar interval, and about very similar topics. The software could then flag and suspend every account that tweets 50 times a day during a certain time range.
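The kind of rule described above can be sketched in a few lines of Python. This is a minimal illustration, not how any platform actually works: the function name `looks_automated`, the threshold of 50 posts and the 10% tolerance on interval variation are all assumptions chosen to mirror the example in the text.

```python
from statistics import mean, pstdev


def looks_automated(post_times, min_posts=50, max_variation=0.1):
    """Flag one day of activity that is both high-volume and evenly spaced.

    post_times: posting times in seconds since midnight (hypothetical input).
    min_posts and max_variation are illustrative thresholds, not real ones.
    """
    if len(post_times) < min_posts:
        return False
    times = sorted(post_times)
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    average_gap = mean(gaps)
    if average_gap == 0:
        return True  # every post at the same instant
    # Coefficient of variation: close to 0 means near-constant intervals.
    return pstdev(gaps) / average_gap <= max_variation


# Hypothetical account posting every 20 minutes, starting at 06:00.
scheduled_account = [6 * 3600 + i * 1200 for i in range(50)]
# Hypothetical person posting a handful of times at irregular moments.
casual_account = [8 * 3600, 8 * 3600 + 90, 12 * 3600 + 30, 19 * 3600, 22 * 3600 + 600]

print(looks_automated(scheduled_account))
print(looks_automated(casual_account))
```

A rule like this is cheap to run across thousands of accounts, which is also why it is cheap to reverse-engineer.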

However, a smart “propaganda coder” can circumvent the filters by instructing the bots to post a random number of posts each day and at random times. Moreover, the bots could be programmed to occasionally post random content, too. This has been observed in several studies.
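To illustrate why fixed rules are fragile, the sketch below compares a rigid posting schedule with a randomized one. It is a simplified assumption of how such evasion might look, not code taken from any observed bot network; `gap_variation`, `randomized_day` and the 20 to 80 posts range are hypothetical names and values.

```python
import random
from statistics import mean, pstdev

SECONDS_PER_DAY = 24 * 3600


def gap_variation(post_times):
    """Coefficient of variation of the gaps between consecutive posts."""
    times = sorted(post_times)
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return pstdev(gaps) / mean(gaps)


def randomized_day(rng, min_posts=20, max_posts=80):
    """Pick a random number of posts at random, distinct seconds of the day."""
    count = rng.randint(min_posts, max_posts)
    return sorted(rng.sample(range(SECONDS_PER_DAY), count))


# A rigid schedule: 50 posts, exactly 20 minutes apart.
rigid_day = [i * 1200 for i in range(50)]
# A randomized schedule, seeded so the example is repeatable.
jittered_day = randomized_day(random.Random(0))

print(gap_variation(rigid_day))     # 0.0, trivially detectable
print(gap_variation(jittered_day))  # far from 0, closer to human irregularity
```

A detector that looks for a fixed daily count or near-constant intervals would catch the first schedule and miss the second, even though both come from the same script.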

Our own research suggests that it is still very easy to disguise a bot or inauthentic account as a real social media user. Add to this the fact that AI is making it much easier for bots to communicate like human beings.

Social media safety options can backfire

Social media safety features can defeat their own purpose, as they, too, can be easily manipulated.

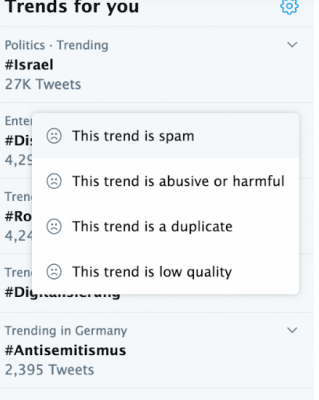

Twitter has an option for users to report harmful content and recently also introduced the ability to report trending topics as spam, abusive or harmful, duplicate or low quality. Our team observed how authentic tweets and trends that are critical of the authoritarian government of Thailand soon disappeared from the hashtag results and trends list, despite very high engagement and conversations.

Only yesterday, a leak of Thai government documents confirmed that large numbers of army recruits collectively report genuine tweets (and trending topics) as spam or fake news, mainly those of their opponents and critics.

This kind of manipulative action abuses the safety measures set in place by Twitter and similar platforms. It silences legitimate sentiments, criticism and facts, while paving the way for systematic disinformation.

Big tech platforms’ transparency reports barely scratch the surface

Although much has been promised by big tech corporations – and there certainly is some progress – more needs to be done.

Our analysis of Google and Facebook’s ad transparency reports during the UK 2019 General Election revealed several problems with viewing and interpreting crucial information about political advertising activity. Expenditures, the number of people reached and targeting criteria were vague and unclear.

As a company that manages digital campaigns, we are surprised that the reports produced by the internet giants are hard to navigate and only contain a tiny fraction of the data points that are readily shown to advertisers in real time. Any digital marketing manager or search engine or social media specialist will confirm that this data is easily available.

This raises the question of how accurate all other reports produced by big tech might be.

We will not speculate on whether this is done intentionally or not. However, this indicates that the burden of transparency and auditing should be shared, including by more tech-savvy public watchdogs or authorities.

Micro-targeting and political ads are a red herring

Micro-targeting, a form of paid advertising on Google, Facebook, Twitter and other big tech platforms, received a lot of attention over the past two years. In essence, it allows advertisers to tailor their campaign messages according to different user characteristics based on powerful data-driven algorithms. Malicious actors have abused this to target their audiences with disinformation or incendiary information, notably in the case of Cambridge Analytica and AggregateIQ.

The backlash led to several reactions, including Twitter announcing a complete ban on all political ads and advocating a narrative in favour of “earned” visibility on the platform.

As our co-founders explained at the United Nations IGF last November, this is clearly a “red herring”, because the visibility of normal, non-paid content is also determined by powerful data-driven algorithms. Banning political ads also shifts the responsibility of deciding what content can be deemed “earned” to millions of Twitter users, who may include armies of fake accounts, bots and of course manipulators.

At the same time, newer politicians without an existing following can find it much harder to gain visibility in time for an election or referendum. This seems unfair considering our finding that non-political actors who are allowed to advertise do indeed post political content without being restricted by Twitter.

While we welcome stronger regulation of online political ads, such as the efforts of the UK Parliament’s APPG on Electoral Campaigning Transparency, this should be done fairly and without diverting the attention from the other sources of disinformation and propaganda.

The far-right AfD party in Germany has been shown to have risen in popularity thanks to fake accounts and bots on social media rather than micro-targeting and political ads, a case we raised at an APPG session with UK MPs Stephen Kinnock, Caroline Lucas and Deirdre Brock as well as the Electoral Commission.

Google search results lack accountability

More subtle (and rarely mentioned) is the fact that Google search results also contribute to algorithmic disinformation. Google (and other search engines) list websites based on algorithms that favour a range of criteria.

A good Search Engine Optimization (SEO) specialist will understand these criteria and be able to manipulate the results, making it possible to find content from unreliable sources (for example, a blog with far-right extremist views) before reliable ones (for example, a well-established and trusted national newspaper).

Our research suggests that while Google appears to prioritize national newspapers, unknown blogs with unreliable information can also rank top in the search results for a wide range of search terms.

What about Reddit, Goodreads, Quora, StackExchange, discussion forums, review communities…?

Although most people today use large social media platforms like Facebook and Twitter, narrow topic-specific information is found, shared and discussed in smaller online communities.

Discussion forums about specific topics can contain plenty of fake users or impersonators, yet often rely on volunteer and amateur moderators. The “off topic” sections within forums related to travel, technology, health and relationships can contain loaded discussions about politics and thus also plenty of misinformation.

Anyone who has checked Amazon or Goodreads reviews before buying a book, or any other review site before booking a trip, hotel or restaurant, will have come across a large number of fake reviews. While many just aim to increase sales for particular books, sinister messages pushing a particular agenda can also be found in books about politics and current affairs.

Reddit in particular is known to have hosted discussion groups that promote hatred and violence based on false information, including one that led to the Charlottesville car attack in 2017.

Very little has been done about these and the numerous smaller, less visible online communities, which are potential breeding grounds for extremism.

Recommendations: accountable sources, genuine users and more public transparency

The above issues highlight that most of the current and recommended safeguards against disinformation can be tricked. Relying on big tech platforms to self-regulate also yields lacklustre results. It is certainly welcome, but not sufficient.

Our recommendations are an attempt to go the extra mile and proactively close “low-hanging fruit” loopholes, and we recognize that these too may need to evolve over the years.

- Accountable sources prioritized on platform search results

Search engines and social media platforms rank their search results based on different criteria fed into their proprietary algorithms. We have noted that the results can be manipulated and contribute to spreading false information.

While it may be farfetched to expect the big tech companies to reveal the inner workings of their algorithms in full, or even to get them involved in deciding what information is accurate, it should be an easy task for them to instruct their programs to give default priority to trusted and accountable sources of information, such as verified and official accounts of newspapers, think tanks, government agencies and research and education institutions.

Although big tech companies appear to have taken some steps in this direction, results indicate that the outcome is not always consistent.

- Identity verification for all users or account owners

Today, anyone can open a new Facebook, Twitter, Quora or Reddit account by simply confirming an e-mail address or phone number. While this makes it easier for people to sign up, it is also the most obvious reason why anyone can create any number of fake accounts and spread misinformation.

Because AI cannot detect every fake account, a verified identity is a safer guarantee that an account is genuine. Several platforms already ask for an ID, or at least have an option to submit one in order to gain a “verified member status” (not to be confused with Twitter verified profiles, which are currently reserved only for popular or public figures). Measures like these could be required of all social media and forum or forum-like platforms.

This shouldn’t mean that users cannot use multiple accounts or stay anonymous anymore. There are various scenarios in which both are justifiable and a fundamental human right.

For example, a user could own a single personal account, but also a different account for their business, one for their university project and one for their music band.

Or a user may live in an authoritarian country which cracks down on dissent, and thus will want to report on the regime while keeping their identity private. One possible answer to this is a robust technology solution that could safely confirm someone’s identity while protecting their personal data from unintended audiences, which may include authoritarian regimes and even the social media platform the user signs up on. This could be run by an independent party operating in a country with strong data protection laws and enforcement.

It should be noted that IDs can be faked, and foreign ones are harder to verify. Newer technology solutions that use facial recognition or blockchain could offer safer, privacy-oriented, efficient and global standards of identity verification for social media platforms, forums and online communities.

- Extended transparency reports and tech-savvy audits

Several platforms, Facebook in particular, have gone to great lengths to increase transparency on micro-targeting and political ads activity, including a browsable report of ads and the location of the advertisers. While these reports are currently very limited, they could easily be extended both in depth and scope.

In depth, the data should be more accurate and granular, reporting exact expenditure, views and the demographic targets of the advertised content.

In scope, not only advertised content should be covered, but also normal “organic” posts that, to the public, could look authentic but actually hide fake information.

Finally, these reports should be audited by tech-savvy social media and search engine specialists who are deeply familiar with the potential inconsistencies and discrepancies of the platform data.

- A real-time propaganda database shared among governments, big tech and the public

It is widely acknowledged that social media platforms cannot be fact-checkers. It is also dangerous to allow a single body to decide what is true and fake, as it could still push biased narratives.

Moreover, false information that is removed from Twitter can resurface on Facebook, Reddit or any other lesser-known platform.

A more collaborative effort that involves multiple stakeholders will be less vulnerable to bias and repeated attempts to repost the same disinformation on different platforms.

One possible solution would be a central, real-time database of propaganda, disinformation and hate messages found across all platforms, accessible to and managed jointly by governments, the public and other watchdogs. Platform owners would be required to ensure that any message on this register is automatically removed from their content.
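As a rough illustration of how such a register could be queried by several platforms, the sketch below stores a fingerprint of each reported message rather than relying on any one platform's internal IDs. Everything here is hypothetical: the `SharedRegister` class, its method names and the choice of a SHA-256 hash over lightly normalized text are assumptions made for the example, not an existing system.

```python
import hashlib
import re


def fingerprint(message: str) -> str:
    """Hash a message after lowercasing it and collapsing whitespace."""
    normalized = re.sub(r"\s+", " ", message.strip().lower())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


class SharedRegister:
    """Hypothetical in-memory register shared by several platforms."""

    def __init__(self):
        # Maps a message fingerprint to the platforms that reported it.
        self._entries = {}

    def report(self, message: str, platform: str) -> None:
        self._entries.setdefault(fingerprint(message), set()).add(platform)

    def is_listed(self, message: str) -> bool:
        return fingerprint(message) in self._entries

    def reported_by(self, message: str) -> list:
        return sorted(self._entries.get(fingerprint(message), set()))


register = SharedRegister()
register.report("Example  FALSE claim", "twitter")
register.report("example false claim", "reddit")

print(register.is_listed("Example false claim"))    # same text, different casing
print(register.reported_by("example false claim"))  # platforms that flagged it
```

Exact-match fingerprints like this only catch verbatim reposts. A reworded or screenshotted version would slip through, so a real register would need fuzzier matching and human review on top.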

To conclude, the general direction of these recommendations is to create a safe and accountable digital ecosystem that prioritizes genuine users and trusted content, and where all sources of online information are tracked and transparent to the public.

Further Reading & Credits

- Tackling Domestic Disinformation (NYU Stern, 2019)

- Tackling online disinformation (European Commission, 2019)

- UK General Election: Review of Google and Facebook’s political ad transparency reports (Worldacquire, 2019)

- Defending our Democracy in the Digital Age (Report from the APPG on Electoral Campaigning Transparency)

- The Rise of Germany’s AfD: A Social Media Analysis (Juan Carlos Medina Serrano, 2019)

- The Brexit Botnet and User-Generated Hyperpartisan News (Bastos and Mercea, 2017)

Header image: Clint Patterson

About Worldacquire

Worldacquire is a political tech firm in London providing digital strategy, advertising and analytics solutions. We managed election campaigns in Hong Kong, contributing to the 2019 landslide pro-democracy victory, and in Thailand, supporting one of the country’s first LGBTQ+ candidates, using technology to cut through electoral red tape. At the United Nations Internet Governance Forum, we called for a fair and balanced regulation of online political advertising and greater transparency of other sources of digital manipulation. We work with governments, think tanks, intergovernmental organizations and social ventures to understand and apply digital technology, A.I. and algorithms effectively and ethically.